University of Oxford

I am currently a DPhil student in the Autonomous Intelligent Machines and Systems Centre for Doctoral Training (AIMS CDT) at the University of Oxford.

Through AIMS, I aim to contribute to AI techniques that can be applied to real-world problems and genuinely benefit people's lives. I am particularly excited to apply my knowledge to building better, safer, and more effective AI tools.

Before starting my DPhil, I completed a Master of Engineering (MEng) in Discrete Mathematics at the University of Warwick, graduating top of my cohort with First Class Honours. During my degree, my third-year project on an efficient 4D rendering algorithm for the Mandelbrot set won the department's outstanding project prize. I also undertook a summer research project on the theoretical foundations of machine learning, investigating the iterative instability of gradient descent.

In my free time, I enjoy playing a variety of tabletop games, learning new things, and turning curiosities about what I learn into software.

Education

University of Warwick

Master of Engineering (MEng), Discrete Mathematics

1st Year: 85.2%

2nd Year: 88.1% (awarded)

3rd Year: 88.3% (awarded)

4th Year: 80.2% (awarded)

Every year is a high First (a mark above 70% is a First).

Sep. 2021 - 2025

Ashby School

A levels

Computer Science: A*, Maths: A*, Further Maths: A*, Physics: A*

Sep. 2019 - Jul. 2021

Ashby School

Level 2 Certificate in Further Maths (A^, the top grade)

10 GCSEs, including Computer Science (8), Maths (9), Design and Technology (9), Physics (9) and English Language (7); overall 3x 9, 2x 8, 3x 7, 2x 5.

Sep. 2017 - Jul. 2019

Experience


University of Oxford

Doctoral Student

Oct. 2025 - Present

University of Warwick - URSS Research Project

AI Researcher

Jul. 2024 - Sep. 2024

Rolls-Royce

Software Development Work Experience

Aug. 2021

Rolls-Royce

Work Experience Week

Jun. 2019

Honors & Awards

- University of Warwick - Best overall graduating MEng student in Discrete Mathematics, 2025
- Warwick Award (Silver), 2025
- University of Warwick - Outstanding Department of Computer Science Third-Year Project Prize, 2024
- University of Warwick - Exceptional achievement in the second year for Discrete Maths, 2023
- Ashby School - Outstanding achievement in computer science, 2019

Selected Projects (view all )

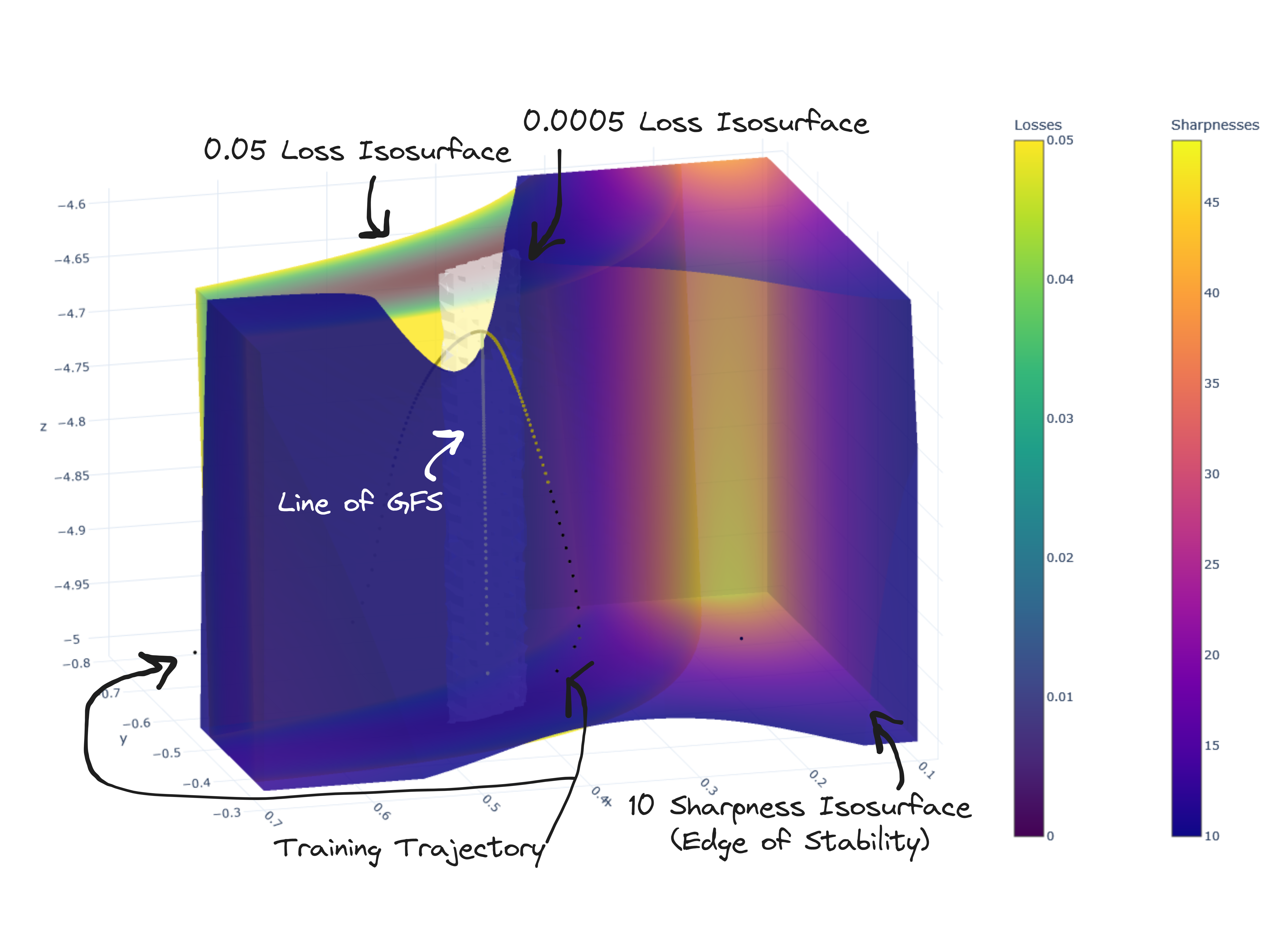

Investigating the Gradient Descent of Neural Networks at the Edge of Stability

Jonathan Auton, Ranko Lazic, Matthias Englert

URSS Showcase 2024

Artificial neural networks are a class of self-learning algorithms that have become central to modern AI systems. The most widely used training technique is gradient descent, a simple yet effective algorithm that improves a network iteratively, repeatedly adjusting it in the direction that most improves its accuracy. However, recent findings suggest that the step size cannot be made small enough to avoid the effects of iterative instability. As a result, the learning process tends to become chaotic and unpredictable. Remarkably, despite this chaos, gradient descent still finds effective solutions. My project seeks to understand the mechanisms underlying this paradoxically effective behaviour.
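To illustrate the kind of iterative instability described above, here is a minimal Python sketch (not code from the project) of gradient descent on the one-dimensional quadratic f(x) = (λ/2)x², whose curvature, or sharpness, is λ. Each update multiplies x by (1 - ηλ), so the iterates shrink towards the minimum when the step size η is below 2/λ and oscillate with growing amplitude when it is above. The function name `gradient_descent` and the chosen values of λ, η and the starting point are illustrative assumptions.

```python
def gradient_descent(sharpness: float, step_size: float, x0: float, steps: int) -> list[float]:
    """Run gradient descent on f(x) = 0.5 * sharpness * x**2 and return every iterate."""
    xs = [x0]
    x = x0
    for _ in range(steps):
        grad = sharpness * x          # f'(x) = sharpness * x
        x = x - step_size * grad      # equivalent to x <- (1 - step_size * sharpness) * x
        xs.append(x)
    return xs


# Hypothetical curvature: the stability threshold is 2 / sharpness = 0.2.
sharpness = 10.0
stable = gradient_descent(sharpness, step_size=0.15, x0=1.0, steps=20)    # factor -0.5 per step
unstable = gradient_descent(sharpness, step_size=0.25, x0=1.0, steps=20)  # factor -1.5 per step

print(f"step size 0.15: |x_20| = {abs(stable[-1]):.2e}")    # shrinks towards 0
print(f"step size 0.25: |x_20| = {abs(unstable[-1]):.2e}")  # oscillates and blows up
```

For a neural network, the role of λ is played, roughly, by the largest eigenvalue of the loss Hessian; the edge-of-stability literature reports that this quantity tends to rise during training until it hovers around 2/η, which is where the simple picture above stops applying.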

Interactive Exploration of 4D Fractal Orbits

Jonathan Auton, Marcin Jurdzinski

Master's Dissertation 2024

A Java application for rendering a 4D representation of the Mandelbrot set. The project uses novel 4D projection techniques to visualise and navigate the space efficiently, and generalises well to alternative fractal-generating formulae.
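The description above does not say which 4D representation the project uses. One common construction, assumed here purely for illustration, treats both the starting value z₀ and the parameter c of the iteration z → z² + c as free complex numbers, giving the 4D space (Re z₀, Im z₀, Re c, Im c): the plane z₀ = 0 is the Mandelbrot set, and each plane of fixed c is a filled Julia set. The Python sketch below (the project itself is written in Java) computes escape times in that space and samples an arbitrary 2D plane through it. `escape_time`, `render_slice` and their parameters are hypothetical names, and simple planar slicing is a generic stand-in, not the project's own projection technique.

```python
def escape_time(point: tuple[float, float, float, float], max_iter: int = 100) -> int:
    """Iterate z -> z**2 + c from the 4D point (Re z0, Im z0, Re c, Im c).

    Returns the number of iterations before |z| exceeds 2, or max_iter if it never does.
    """
    z = complex(point[0], point[1])
    c = complex(point[2], point[3])
    for n in range(max_iter):
        if abs(z) > 2.0:
            return n
        z = z * z + c
    return max_iter


def render_slice(origin, u, v, width, height, scale, max_iter=100):
    """Sample the plane origin + s*u + t*v through 4D space on a width x height grid."""
    rows = []
    for j in range(height):
        t = (j - height / 2) * scale
        row = []
        for i in range(width):
            s = (i - width / 2) * scale
            # Map the 2D pixel offset (s, t) to a 4D point on the slicing plane.
            p = tuple(o + s * a + t * b for o, a, b in zip(origin, u, v))
            row.append(escape_time(p, max_iter))
        rows.append(row)
    return rows


# Mandelbrot slice: z0 = 0, with c varying over a plane centred at c = -0.5.
MAX_ITER = 50
mandelbrot = render_slice((0.0, 0.0, -0.5, 0.0), (0.0, 0.0, 1.0, 0.0), (0.0, 0.0, 0.0, 1.0),
                          width=40, height=24, scale=0.1, max_iter=MAX_ITER)
for row in mandelbrot:
    print("".join("#" if n == MAX_ITER else "." for n in row))
```

Passing an origin of (0, 0, Re c, Im c) with u = (1, 0, 0, 0) and v = (0, 1, 0, 0) would instead sample the filled Julia set for that c, and tilted choices of u and v give slices that mix the two.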
